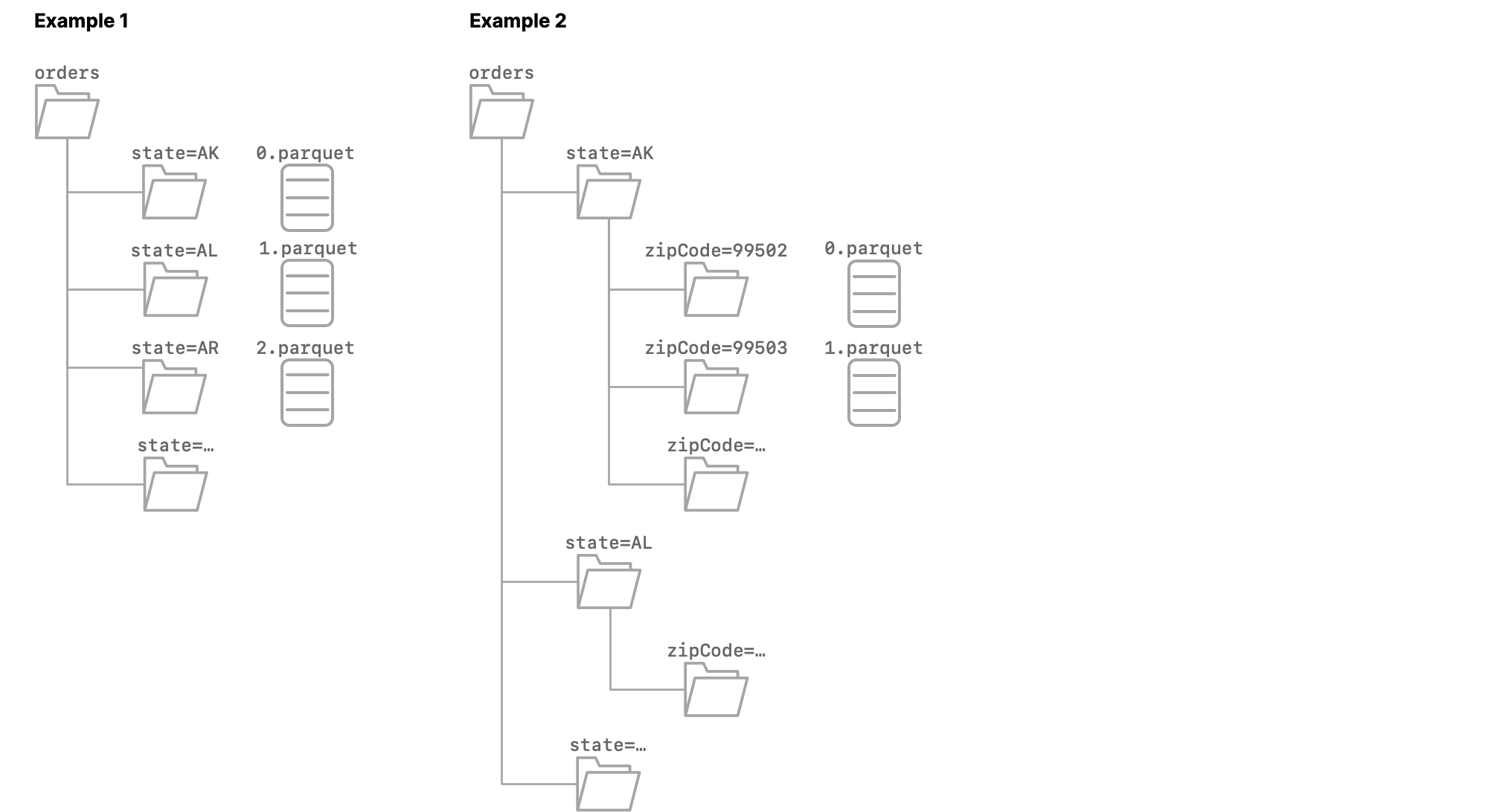

Add support for adding partitions as columns for parquet (and CSV files) · Issue #7744 · pola-rs/polars · GitHub

apache spark - Partition column is moved to end of row when saving a file to Parquet - Stack Overflow

Create a Big Data Hive/Parquet table with a partition based on an existing KNIME table and add more partitions later – KNIME Community Hub

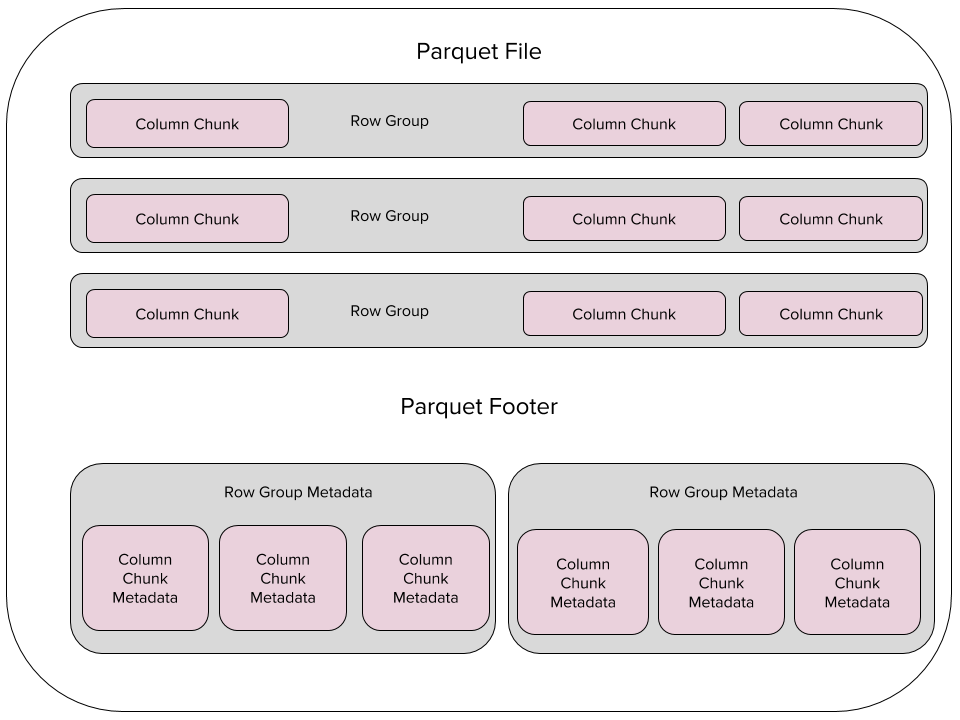

Python and Parquet performance optimization using Pandas, PySpark, PyArrow, Dask, fastparquet and AWS S3 | Data Syndrome Blog

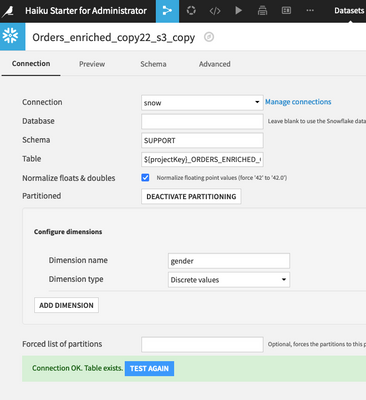

Re: Partition Redispatch S3 parquet dataset using column - how to run optimally? - Dataiku Community

Mo Sarwat on Twitter: "Parquet is a columnar data file format optimized for analytical workloads. Developers may also use parquet to store spatial data, especially when analyzing large scale datasets on cloud